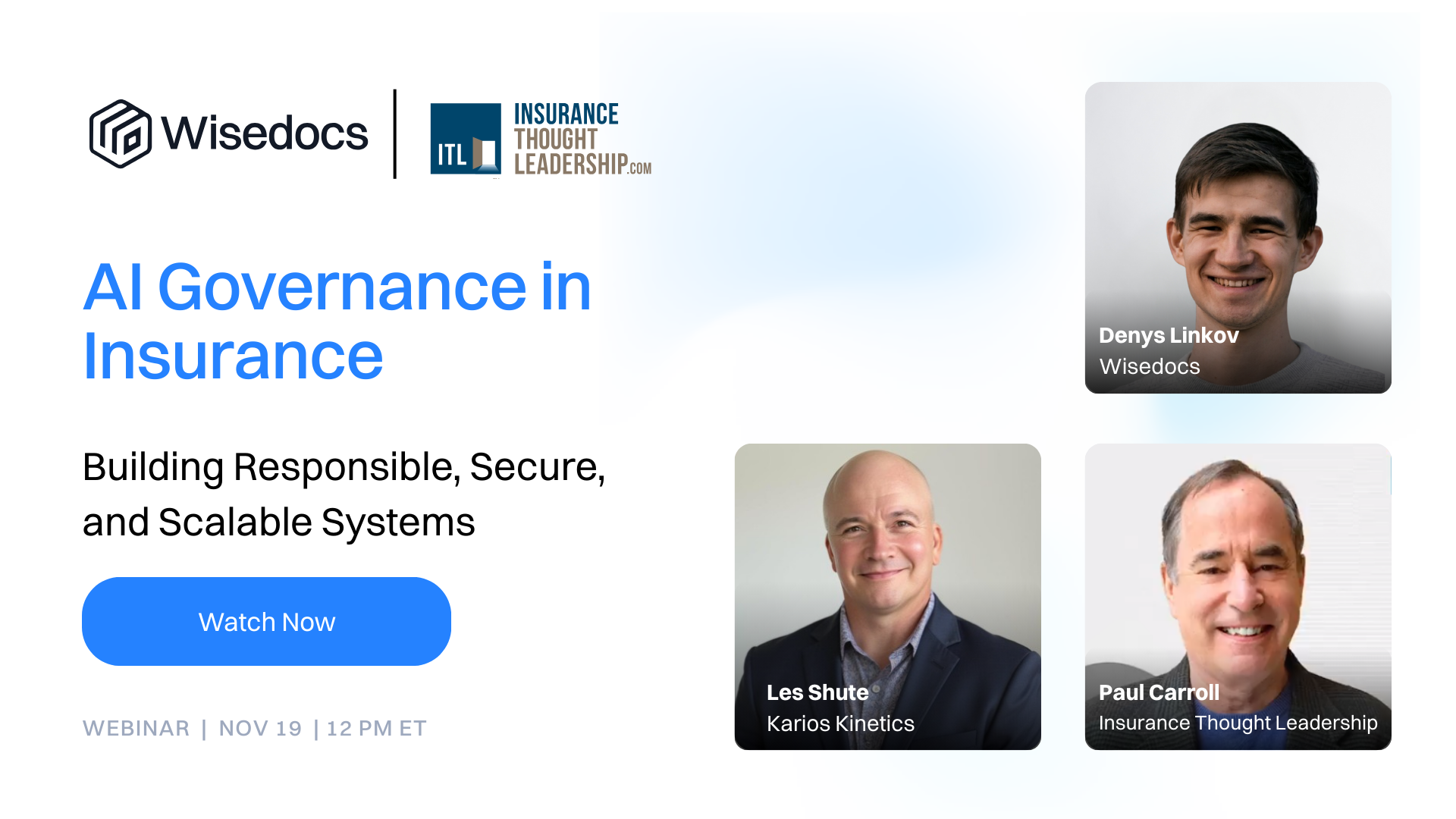

Artificial intelligence is reshaping insurance claims faster than ever, but breakthrough innovation also demands responsible governance. In a recent webinar sponsored by Wisedocs and hosted by Insurance Thought Leadership, three industry leaders – Denys Linkov (Head of ML at Wisedocs), Les Shute (President of Kairos Kinetics), and Paul Carroll (Editor-in-Chief at Insurance Thought Leadership) – tackled the challenge of scaling AI from proof of concept to production while maintaining security, compliance, and trust.

Why AI Governance Matters

The insurance industry stands at a vital crossroads. As Linkov warns, “The biggest risk [in AI governance] is taking something shiny and putting it in the forefront of your technological output.” After ChatGPT launched in late 2022, the rush to deploy in 2023 produced website chatbots that hallucinated promises of customer refunds, as well as work that companies had to retract. His message was emphatic: “The important thing is to really understand what you learned over the past 30, 40, 50 years as an industry in the technology space and adapt that to GenAI with domain expertise, providing the guidance with good technology practices, good security practices.”

Shute drew a crucial distinction: “AI is nothing new, Generative AI is new.” While traditional AI focused on predicting outcomes from large datasets, Generative AI creates entirely new content and insights. “It is a fundamentally different technology than what we’re used to. It bends the brain a little bit, in order to do things correctly, because in some respects you have to unlearn what you’ve learned in the past by working with other AI systems.” This shift demands rethinking automation and augmentation processes while building new safeguards. As Linkov noted, with ChatGPT only three years old and API keys making content creation accessible to many, “we have to rethink how governance is done because creating content is much easier now, but actually curating it and putting the right product into our customers’ hands is much different.”

Infrastructure and Security are the Foundation of Trust

When it comes to deploying AI responsibly, the speakers agreed that foundational security practices remain paramount. Cloud transformation over the past 15 years has taught valuable lessons about single sign-on, multi-factor authentication, and granular permissions, all of which apply directly to AI systems. However, AI introduces a unique challenge: non-deterministic behavior that standard IT systems aren’t designed to handle.

For carriers evaluating AI vendors, Shute was direct: “Anybody who’s looking to work with a vendor, SaaS or otherwise, you should have a plan in place for properly vetting vendors, especially if you’re seeking to purchase or partner with somebody on a Generative AI product.” Organizations must understand how partners manage data, protect privacy, architect their systems, and align with business goals. He emphasized the importance of trust: “Make sure you are not cutting corners when it comes to compliance and trust. You have to make sure you can trust who you’re working with to actually produce what they say they’re going to do and do so in a way that protects your hide and manages your own risks.”

Linkov reinforced this message, urging carriers to understand relevant regulations – from HIPAA for medical documents to NIST security standards – and demand transparency from their technology partners. At Wisedocs, this means validating medical summary AI and AI medical chronologies through expert-in-the-loop systems to catch potential hallucinations before they reach adjusters.

Scaling with Human Oversight

Moving AI from proof of concept to production involves far more than technical readiness. According to recent MIT research discussed in the webinar, AI systems can now perform work equivalent to nearly 12% of U.S. jobs, measured across a workforce of about 151 million workers and representing approximately $1.2 trillion in wages. But as the speakers emphasized, this doesn’t mean eliminating human judgment, especially in decisions affecting people’s livelihoods.

Human-in-the-loop (HITL) processes emerged as a central theme. Shute was unequivocal: “Human judgment is particularly important when it comes to things that affect humans. If you’ve got an AI system that affects a human’s livelihood, such as a resume screening system or medical records summarization, you must have a human overseeing these decisions.” The speakers recommended establishing governance councils with stakeholders from all potentially impacted areas.

Linkov explained that HITL systems also enable organizations to measure model accuracy in context. While 99% accuracy might be acceptable in some applications, that 1% gap could be catastrophic in others. “The best use case right now is using AI for augmentation as a copilot,” he advised. He also emphasized that “there has to be a comprehensive process, and people need to align on it. If there are milestones to hit earlier on, it can’t be security, governance, and legal just doing a checkmark at the end, that won’t work; these systems are complex; they need to be brought in at the beginning.”
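To make the 99% point concrete, here is a minimal Python sketch of the arithmetic. It is an illustration only, not something presented in the webinar: the function names, the sample of 200 reviewed outputs, and the volume of 10,000 documents are all hypothetical assumptions.

```python
def observed_accuracy(reviews: list[bool]) -> float:
    """Share of AI outputs that a human reviewer marked as correct.

    `reviews` is a hypothetical list of reviewer verdicts (True = correct).
    """
    if not reviews:
        raise ValueError("need at least one reviewed output")
    return sum(reviews) / len(reviews)


def expected_errors(accuracy: float, volume: int) -> float:
    """Expected number of incorrect outputs at a given accuracy and volume."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return (1.0 - accuracy) * volume


if __name__ == "__main__":
    # Hypothetical sample: reviewers checked 200 outputs and flagged 2.
    sample = [True] * 198 + [False] * 2
    accuracy = observed_accuracy(sample)

    # Hypothetical monthly volume of documents processed.
    volume = 10_000
    print(f"Observed accuracy: {accuracy:.1%}")
    print(f"Expected errors at {volume:,} documents: "
          f"{expected_errors(accuracy, volume):.0f}")
```

Whether that error count is tolerable depends on what each error costs, which is why the same accuracy figure can be fine for one use case and unacceptable for another.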

The Wisedocs Approach

Throughout the discussion, Wisedocs’ approach exemplified the principles being discussed. The company combines domain expertise with rigorous technology practices, validates outputs through expert review, and maintains transparency with enterprise partners about model capabilities and limitations. This commitment to responsible AI governance, paired with insurance industry knowledge and HIPAA-compliant, SOC 2-certified infrastructure, positions Wisedocs as a model for how enterprises can scale while maintaining trust.

Ready to learn more? Watch the full on-demand webinar to hear detailed strategies for building responsible AI governance frameworks that protect your organization while unlocking the potential of artificial intelligence in claims processing.
